How PAPER Magazine’s web engineers scaled their back-end for Kim Kardashian (SFW)

#BreakTheInternet?

On November 11, 2014, the art-and-nightlife magazine PAPER “broke the Internet” when it put a Jean-Paul Goude photograph of a well-oiled, mostly nude Kim Kardashian on its cover and posted nude photos of her to its website (NSFW). It linked all of these things, and other articles too, under the “#breaktheinternet” hashtag.

There was one part of the Internet that PAPER didn’t want to break: The part that was serving up millions of copies of Kardashian’s nudes over the web.

Hosting that butt is an impressive feat. You can’t just put Kim Kardashian nudes on the Internet and walk away; that would be like putting up a tent in the middle of a hurricane. Your web server would melt. You need to plan.

#Don’tBreakThisPartPlease

During the first week of November, the PAPER people got in touch with the people who run their website and said:

“We may get a lot of traffic.”

PAPER is an arts, culture, celebrity, and nightlife magazine, deeply woven into the culture of New York City after 30 years in existence. It has had a website for about 20 of those years. It’s neither a big nor a small magazine: its monthly readership, including its website, is 650,000 people, according to its media kit, and it claims more than 100,000 subscribers.

The person at PAPER who did the getting-in-touch is named Jamie Granoff. She manages the magazine’s sites, web advertising, and production, and she also coordinates events and provides support on the print magazine.

The company that keeps the papermag.com website running is called 29th St. Publishing. It’s in New York City. (Disclosures: I worked with this company a few years ago and people who work there are my friends. I wrote this article because the subject interests me, and because they did a crazy thing well in a short time. PAPER gave me permission to talk with them about the project and I have no financial interest in their work.)

Back to that warning about “a lot of traffic.” At first, David Jacobs, the founder of 29th St. Publishing, was unconcerned. When the people at PAPER do events or big ad campaigns, he told me via chat, “there’s very often a request like this.” He reasoned that the PAPER site was designed to handle a couple million people per month with no problem, and it had been chugging along for years.

“And Jamie’s like, ‘well, we need a call,’” said Jacobs, “and [I] was like, ‘I love you guys! I am happy to have a call! But…fine, we’ll have a call.’”

Jacobs was surprised that David Hershkovits, one of the founders of PAPER, was on the line—not typical for a conversation about web architecture. It was then that he learned that the feature would include a nude Kardashian, butt exposed.

“It’s her real butt,” said Hershkovits.

“And that stuck with me for some reason,” said Jacobs. “Like, what else would it be?”

He added: “But now that I understand the project, I see why that’s important.”

Eep

Jacobs contacted Greg Knauss. Knauss lives in California and is the “owner, operator and intern” at Extra Moon, LLC, a contractor for 29th St. Publishing and for other companies and organizations. While Jacobs does the product management and scheduling, Knauss keeps the servers running.

Via email, Jacobs told Knauss that PAPER believed “they’ve got something that they think will generate at least 100 million page views,” and asked: “will their current infrastructure support that?”

“This sort of cold thrill goes down my spine,” Knauss said, “and the only thought that makes it out of my brain is, ‘Eep.’”

He continued: “I reflexively begin designing the architecture in my head. It’s a nerd impulse. Dogs chase after thrown balls, system administrators design to arbitrary traffic.”

Jacobs began working with PAPER to keep the process moving. Knauss set to work, not knowing exactly what he was building. “I started work on the 6th” of November, he said. The site would go live on Tuesday, the 11th.

Scaling: It depends

You might ask: Isn’t the web cheap and fast and isn’t it easy to set up a website and get a zillion readers? The answer, as with everything in technology, is: It depends. Not all websites or web services are built the same way.

Let’s use, and terribly abuse, a transportation metaphor:

A typical website—for example a WordPress blog that you install on your own web server—is like a car that you might buy at a dealership. You can customize the color and add a new stereo, but it’s just a regular car underneath and there are tons of people who can fix it if it breaks. It doesn’t cost much to run, and you drive it yourself.

A publisher’s website like PaperMag.com needs some customization. It’s like a tour bus for a musical group. It’s basically a regular bus, but it has been designed for their needs. It might have a big TV and a small recording studio plus a fridge. The band doesn’t operate their own bus. Instead, they pay people to drive the bus, keep it clean and free of bugs, and keep it from breaking down. In this case PAPER plays the songs; 29th St. Publishing operates the bus.

If you’re a massive web platform, like Facebook or Twitter—or even BuzzFeed—you’re like an airplane. You’re staffed by professionals at all times. Everything has to work perfectly because if something breaks down the whole thing falls from the sky. The equipment is always at risk of breaking, is incredibly expensive, and requires constant monitoring. But you can move an enormous number of people very quickly.

What PAPER was asking was: Can you take a website that we use to reach hundreds of thousands of people in a given month and turn it into a website that can reach tens of millions of people or more? Or rather, “can you turn our tour bus into an airplane for a week or two?”

Gluing the jets on the bus

I asked Knauss to run down how PaperMag.com worked, from the bottom to the top, before he started to prepare for the Kardashian photos. Here’s what he told me. (This part is pretty nerdy and filled with product names and acronyms, but it’s also representative of how billions of web pages are hosted in the world every day.)

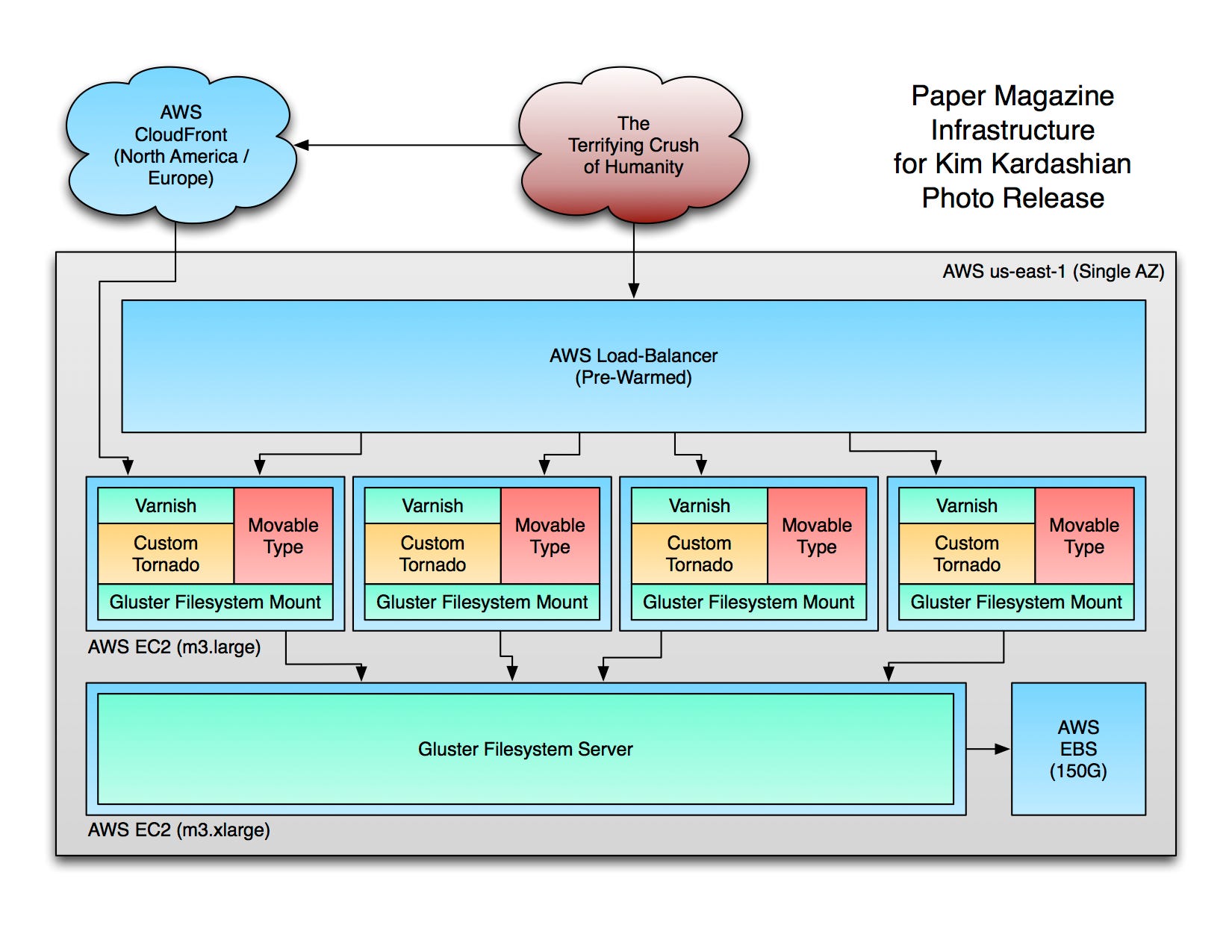

- First, the whole thing is hosted on Amazon Web Services, or AWS. Amazon (the same Amazon that sells diapers and books) offers a ton of very different, very specific services that you can turn on with the push of a button (a virtual, web button). It has hundreds of thousands of computers that you can lease by the moment to just be computers, to serve as databases, to make files available on the web, and so forth. AWS is one of the major fabled “clouds” of the new world of cloud computing.

- There’s a database, run by Amazon, that is set up for PAPER’s needs. It’s just a regular old relational database. Data goes in, data comes out.

- In addition to that database, PAPER leased a single computer from Amazon (which might be a real computer in-a-box and might be a virtual “slice” of another, bigger computer; it’s not our place to know). This machine served between a half-million and a million unique visitors a month.

- On that particular computer, which is a server running the Linux operating system, there were a few server programs (servers running on servers; it is what it is). These were programs that were always running and waiting for people to come and ask them for things. There was a version of Movable Type, a venerable web content management system (CMS). An editor logs into Movable Type to do things: to create a new article, edit the article, fix typos, upload pictures, and so on. The CMS saves your articles in a database (see above) where they can be stored, indexed, and listed. Later (moments later, years later), someone might come by and ask for a web page, and the CMS might take that same data out of the database and make a web page to show the article, or a home page made of many, many articles.

- But not in this case! CMSes are great at creating and storing content, but they are rarely super-flexible when it comes to pulling data out of a database and making pages. Thus data takes a more complex path to get to magazine readers. In this case, Movable Type saves out all the information that is relevant to a web page in a common format (JSON, for the curious). A specialized, highly configurable web server called Tornado reads that data and applies some custom code that was written several years ago by a programmer named Natalie Podrazik, who was the CTO of 29th St. Publishing. That code chews the data a bit, then turns it into web pages by using templates: web page scaffolding, basically, that says “put the headline here” and “put the article here.”

- But we’re not done! In front of all of this is a cache, called Varnish. When you come and ask for a page on PaperMag.com, Varnish checks to see if it has that page baked and ready to serve. If it does, it sends it over the Internet and your web browser lets you see it. If it doesn’t, it goes back and asks the Tornado server for the page. The Tornado server makes up the page by loading the data from Movable Type and the templates, mashing them together. That goes out to you on the Internet, and Varnish saves a copy for the next person who comes along. Every now and then, Varnish will throw away the version it’s keeping because it knows that websites change.
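To make the read path above concrete, here is a minimal Python sketch of the two steps between the database and the reader: filling a template from JSON (the job Tornado and Podrazik's code did) and a Varnish-style cache in front of it. It is a toy under stated assumptions, not PaperMag.com's code: the JSON fields, the template, the names `render_page` and `PageCache`, and the 60-second expiry are all hypothetical, and real Varnish is configured in its own language (VCL), not written in Python.

```python
import json
import time
from string import Template

# Hypothetical article record, standing in for the JSON Movable Type writes out.
ARTICLE_JSON = '{"headline": "Example headline", "body": "Example body text."}'

# Page scaffolding: "put the headline here", "put the article here".
PAGE = Template("<html><h1>$headline</h1><p>$body</p></html>")


def render_page(raw_json: str) -> str:
    """Stand-in for the Tornado step: parse the JSON, fill in the template."""
    data = json.loads(raw_json)
    return PAGE.substitute(headline=data["headline"], body=data["body"])


class PageCache:
    """Toy Varnish-like cache: serve a stored copy until it expires."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock   # injectable so expiry can be tested
        self.store = {}      # url -> (expires_at, html)
        self.misses = 0      # how many times the backend had to render

    def get(self, url: str, backend) -> str:
        now = self.clock()
        entry = self.store.get(url)
        if entry is not None and entry[0] > now:
            return entry[1]  # hit: the page is already "baked and ready to serve"
        # Miss or stale copy: ask the backend, keep a copy for the next reader.
        self.misses += 1
        html = backend()
        self.store[url] = (now + self.ttl, html)
        return html


if __name__ == "__main__":
    cache = PageCache(ttl_seconds=60)
    for _ in range(1000):
        cache.get("/example-article", lambda: render_page(ARTICLE_JSON))
    print(cache.misses)  # 1: only the first request reached the renderer
```

A thousand requests for the same page cost one render; every other request gets the stored copy until it expires. That is why a cache in front of an unchanged renderer can absorb far more traffic than the renderer alone.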

And that’s how a web page is made and sent to a user, somewhere in complexity between making a baby and creating a new government. Repeat the processes above a couple hundred thousand times and you’ve got the PaperMag.com website in a typical month. (I’ve left out a ton of stuff, of course. Websites are complex beasts.)

Stacked

Note how the technologies are stacked on top of each other? Software architects call a whole set of linked technologies like this a “stack.” (The word “stack” also refers to a way of storing and accessing items in a computer’s memory, because nothing has one meaning in technology.)

One of the things nerds love to do is look at other people’s stacks and say, “what a house of cards!” In fact I fully expect people to link to this article and write things like, “sounds okay, but they should have used Jizzawatt with the Hamstring extensions and Graunt.ns for all their smexing.”

Here’s what I think: We are rebuilding our entire culture and economy on top of computers, which are crazy light switches that turn on and off a billion-plus times per second.

You can describe what a given computer might do in a given circumstance using math, but there are billions of computers running at all times now, all chatting with each other using different versions of software. So it’s chaos. Everything is a house of cards. You can point at the house of cards and laugh, or you can sit down at the poker table, grab the cards, and deal.

Preparing for Kim; or: cache rules everything around me; or: teaching a bus to fly

No matter how slick Varnish can be at caching pages, one computer was not going to be enough. The first thing Knauss did was get a big honking server to run on the Amazon cloud, with a large hard drive. He copied all the images and files from the smaller original web server to the new, big server. Then he installed a piece of software called Gluster, which allows many computers to share files with each other—it’s sort of like a version of Dropbox that you can completely control. This way Knauss could bring up new servers and they would all have a common filesystem ready to go.

Moving a well-understood, well-documented website from one computer to another is actually not that disruptive. What’s disruptive is changing the server code that makes the web pages. Knauss’s goal was to change as little as possible about the core PaperMag.com—to leave the code that Podrazik had written years ago entirely alone. “Software changes are ratholes,” he reasoned. “And fiddling with them will introduce problems that you won’t find until 100 million people are using it.”

With the Gluster server running, Knauss added another computer: One more big Amazon server. He called this the “front-end server.” This server talked to the big one running Gluster. It also did all the things that the old server did: It ran Movable Type, Varnish, and Tornado.

Knauss added three more servers exactly like that one, for a total of four front-end servers. These machines all shared the same big virtual disk, thanks to Gluster. If one of the front-end machines died, another one would take its place. So there were five really big computers instead of one little one—all working like the little one.

Now Knauss needed to figure out three more things. First, he needed to balance the requests for web pages among the four servers. Second, he needed a way to distribute the pictures. And finally, he needed to test the whole shebang. He’d strapped a jet engine onto a tour bus, but it lacked wings. Plus no one had tried to fly it yet.

“Late Wednesday night,” he said, “I had trouble sleeping, and got up early Thursday. At this point, I knew it was going to be some sort of dramatic picture of Kim Kardashian and I was sort of sick with worry.” He tweeted this on Thursday morning:

He got in touch with Amazon (they offer good support when you pony up) to set up an “Elastic Load Balancer.” A load balancer takes a web request and hands it off to a given machine; the next request might go to a different machine. This way you can keep any given machine from getting overwhelmed and spread traffic around. This allows you to scale a service “horizontally,” by adding machines, instead of “vertically,” by using a more powerful machine.
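Round-robin is the simplest way to picture "the next request might go to a different machine." The sketch below is a hypothetical Python illustration of that idea, with a health check added to echo the earlier point that another machine takes over if one dies. Amazon's Elastic Load Balancer has its own routing logic, which this does not reproduce, and the server names are invented.

```python
from collections import Counter


class RoundRobinBalancer:
    """Send each request to the next healthy server in a fixed rotation."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._position = 0

    def mark_down(self, server: str) -> None:
        """Take a dead server out of the rotation."""
        self.healthy.discard(server)

    def pick(self) -> str:
        # Try each server at most once per pick, skipping unhealthy ones.
        for _ in range(len(self.servers)):
            server = self.servers[self._position % len(self.servers)]
            self._position += 1
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers left")


if __name__ == "__main__":
    # Hypothetical names for the four front-end servers.
    lb = RoundRobinBalancer(["front-1", "front-2", "front-3", "front-4"])
    print(Counter(lb.pick() for _ in range(8000)))  # 2,000 requests each
    lb.mark_down("front-3")
    print(Counter(lb.pick() for _ in range(9000)))  # 3,000 each for the other three
```

Adding a fifth server to the list spreads the same traffic five ways, which is the "horizontal" scaling described above.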

Then: “It’s Friday,” Knauss said, “and I’ve got a pre-warmed load balancer, four identical front-end servers, and a server to share a filesystem between them.” Now he used another Amazon service, CloudFront. (Amazon really does do everything, which is both inspiring and terrifying.) CloudFront is a CDN, or “Content Delivery Network”: a big, global, distributed cache service. These services take content and make it widely available; they might even distribute it around the world so that it can be downloaded quickly by people where they live. The Internet is fast, sure, but geography still matters.

CloudFront worked by fetching copies of the files on PaperMag.com and keeping them on hand to serve. Now Knauss had:

- Five computers pretending to be one giant computer, with the four front-end servers behind a load balancer.

- And a CDN, totally separate from the giant computer, hosting the images, which take up a lot of space and aren’t dynamic at all.

So how do things come together to make a web page? Well, you let the web browser assemble all these different parts of a website.

This is how many of the largest websites work: A page like that of the New York Times or BuzzFeed is not the product of a single machine. Your browser gets an HTML page and then it goes off on a bunch of goose chases to download pictures, video thumbnails, logos, and ads. It might talk to one, two, or even dozens of servers. All these things come hurtling down the pike and throw themselves against your screen like bugs on a windshield. And that’s how web pages are made.
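One way to see those goose chases is to list the hosts a page's tags point at. The Python sketch below uses the standard library's HTML parser to collect them. The sample page, its paths, and the ad host `ads.example.net` are hypothetical, and a real browser also follows CSS, scripts, and redirects that this ignores.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class ResourceHosts(HTMLParser):
    """Collect the hosts a browser would contact for a page's resources."""

    # Which attribute holds the resource URL for each tag we care about.
    URL_ATTRS = {"img": "src", "script": "src", "iframe": "src", "link": "href"}

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.hosts = set()

    def handle_starttag(self, tag, attrs):
        wanted = self.URL_ATTRS.get(tag)
        for name, value in attrs:
            if name == wanted and value:
                # Resolve relative paths against the page's own address.
                absolute = urljoin(self.base_url, value)
                self.hosts.add(urlparse(absolute).netloc)


def hosts_for(html: str, base_url: str) -> set:
    parser = ResourceHosts(base_url)
    parser.feed(html)
    parser.close()
    return parser.hosts


# Hypothetical page: one stylesheet, one ad script, two images.
SAMPLE_PAGE = """
<html><head>
<link rel="stylesheet" href="/css/site.css">
<script src="https://ads.example.net/ad.js"></script>
</head><body>
<img src="http://cdn.papermag.com/images/cover.jpg">
<img src="/images/logo.png">
</body></html>
"""

if __name__ == "__main__":
    # Prints ['ads.example.net', 'cdn.papermag.com', 'www.papermag.com']
    print(sorted(hosts_for(SAMPLE_PAGE, "http://www.papermag.com/")))
```

Even this tiny page sends the browser to three different hosts; only one of them has to build the HTML.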

Knauss just had to change the server code so that anywhere it pointed to a picture, it pointed to the same picture, but coming from the CDN. “The one tweak I made to the actual code,” he explained, was to swap out the domain pointers—to change “http://www.papermag.com” to “http://cdn.papermag.com” in one configuration file.
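Here is a hypothetical Python sketch of why a one-line configuration change could move every picture: if the templates build image URLs from a single base-URL setting, changing that setting changes every image tag, while the page HTML keeps coming from the original servers. The setting name `image_base_url` and the helper `image_tag` are invented; the article does not say what the real configuration file looked like.

```python
from string import Template

IMG_TAG = Template('<img src="$base/$path">')


def image_tag(config: dict, path: str) -> str:
    """Build an <img> tag from whatever base URL the config names."""
    base = config["image_base_url"].rstrip("/")
    return IMG_TAG.substitute(base=base, path=path.lstrip("/"))


# Hypothetical before/after configs: only the domain changes.
BEFORE = {"image_base_url": "http://www.papermag.com"}
AFTER = {"image_base_url": "http://cdn.papermag.com"}

if __name__ == "__main__":
    print(image_tag(BEFORE, "/images/cover.jpg"))  # <img src="http://www.papermag.com/images/cover.jpg">
    print(image_tag(AFTER, "/images/cover.jpg"))   # <img src="http://cdn.papermag.com/images/cover.jpg">
```

Keeping the change to one configured value, rather than edits scattered through the code, is what let the rest of the site's code stay untouched.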

Other than that, the code that ran the website remained unchanged throughout the entire process.

Bees Bees Bees Bees Bees Bees Bees Bees

Bees Bees Bees Bees Bees Bees Bees

Bees Bees Bees Bees Bees Bees

Bees Bees Bees Bees Bees

Bees Bees Bees Bees

Bees Bees Bees

Bees Bees

Bees

The photo release was imminent. He needed to simulate what would happen when thousands of people began to demand their naked Kardashian picture at once. Knauss chose a tool called Bees with Machine Guns, originally created by the Chicago Tribune. When you run it, it brings up a whole bunch of new computers in the Amazon cloud and makes them attack your website. This way you can test scenarios like, “what if 2000 people are using the site in any given ten-second period.” Bees runs the test and gives a verdict, like “The swarm destroyed the target,” “The target successfully fended off the swarm,” and so forth.

But no matter what he did (and with the clock ticking), Knauss couldn’t get the servers to handle more than 2,000 requests per second. He was pounding the website, but only 25% as much as he wanted to pound it. Finally (he seemed a little tired as he explained this), he realized that the problem was not with his system but with the way he was using the testing apparatus; it couldn’t reach the volume he needed.
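The limit Knauss hit can be reproduced in miniature. In the Python sketch below, a fake server that always takes the same assumed 10 milliseconds per request is hit by a simulated swarm; the only thing that changes between runs is how many requests the client keeps in flight. Measured throughput rises with client concurrency even though the "server" never changes, which is the sense in which a load test can end up measuring the tester rather than the target. This is a local simulation with no network and hypothetical names, not Bees with Machine Guns itself.

```python
import time
from concurrent.futures import ThreadPoolExecutor

SERVICE_TIME = 0.01  # assumed: the fake server answers every request in 10 ms


def fake_request(_: int) -> int:
    """Stand-in for one HTTP request: wait, then report a 200 status."""
    time.sleep(SERVICE_TIME)
    return 200


def swarm(total_requests: int, concurrency: int) -> tuple[int, float]:
    """Send total_requests with at most `concurrency` in flight at once.

    Returns (successful responses, measured requests per second).
    """
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        statuses = list(pool.map(fake_request, range(total_requests)))
    elapsed = time.perf_counter() - start
    ok = sum(1 for status in statuses if status == 200)
    return ok, ok / elapsed


if __name__ == "__main__":
    for concurrency in (5, 20):
        ok, rate = swarm(200, concurrency)
        print(f"concurrency={concurrency}: {ok} ok, about {rate:.0f} requests/second")
```

With these numbers, five workers can never exceed roughly 500 requests per second and twenty roughly 2,000, whatever the server could really take.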

So he evaluated each of the various pieces of the system and determined that they would work well in concert. “I should be able to handle 8,000 requests a second,” he concluded. “Which is 500,000 a minute. Which is almost 30 million an hour.”

He was satisfied that he was ready for 30 million people to come by in an hour, should that occur. He also had a document that would let him bring up a new front-end server in a few minutes, if he needed to host 60 million, 90 million, or 120 million users in the course of an hour. There were ways to automate the process, of course (tools with names like Puppet or Chef), but he decided against them given the time constraints. “In a crunch,” he said, “I’d rather rely on cut-and-paste from a Google Doc than software.”
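The arithmetic behind Knauss's estimate, and behind adding servers for bigger hours, fits in a few lines of Python. Two assumptions are ours, not his: the 8,000 requests per second split evenly across the four front-end servers (2,000 each), and capacity grows linearly as identical servers are added. Like the quote, it counts one request per visitor.

```python
import math

TOTAL_RPS = 8_000        # Knauss's estimate for the four front-end servers
FRONT_END_SERVERS = 4
# Assumption: load splits evenly, so each server handles 2,000 requests/second.
PER_SERVER_RPS = TOTAL_RPS / FRONT_END_SERVERS


def per_minute(rps: float) -> float:
    return rps * 60


def per_hour(rps: float) -> float:
    return rps * 3_600


def servers_needed(requests_per_hour: float, per_server_rps: float = PER_SERVER_RPS) -> int:
    """Smallest number of identical servers for a target hourly load,
    assuming capacity scales linearly with the number of servers."""
    return math.ceil(requests_per_hour / 3_600 / per_server_rps)


if __name__ == "__main__":
    print(per_minute(TOTAL_RPS))  # 480000: "500,000 a minute," rounded up
    print(per_hour(TOTAL_RPS))    # 28800000: "almost 30 million an hour"
    for target in (60_000_000, 90_000_000, 120_000_000):
        print(target, servers_needed(target))  # 9, 13, and 17 servers
```

Under those assumptions, the 60, 90, and 120 million figures would call for 9, 13, and 17 front-end servers, which is why a quick, repeatable way to bring up one more server mattered.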

Up Goes the Butt

“So I’m actually at my little league team’s practice,” said Knauss, “when I get the e-mail that the first photos have gone live. Oh, crap! Is it that late? I run home — by which I mean drive while sweating — and bring up [the performance monitoring tool] ‘top’ on each of the front-end servers.”

While the global digital cultural apparatus is typically in a state of eruption, this was a Krakatoan explosion of opinions about Kardashian, her body, the female body in general, the male gaze, buttock proportionality, reality television, consumerism, feminism, racism, the media, female and male sexuality, nudity, privilege, motherhood, and Kanye West. These are the things that the Internet fights about every day, but now it fought about them in relation to Kim Kardashian’s naked body, linking back to the pictures that PAPER was publishing.

Except, traffic-wise, it was a little underwhelming. Tons of people were coming to the site, but the four big front-end servers were running without any issues, using about 10% of their capacity. Kardashian had tweeted out the news — but pointed to the photo on her Instagram account. Her 25 million followers went there instead of to PAPER’s website.

I asked Knauss: “Could you have done it with just one?”

“Yes,” he answered. That held until the next day, when PAPER published a full-frontal nude shot of Kardashian. Kardashian couldn’t post this on her Instagram feed—Instagram policy allows buttocks but prohibits pudenda. So she pointed everyone to PAPER.

Now, said Knauss, “traffic is insane.” The servers were working steadily at five times their earlier pace, and the CDN was delivering images. The system worked. Nothing choked. Nothing broke down. PAPER got its traffic: 30 million unique visitors over a few days, they said via email. The Internet did not break, but it bent. (Although their stock installation of Google Analytics, which tracks the traffic to the site, melted for a day and a half.)

And a few weeks later they took it all down and returned things to normal.

“Hardware isn’t the thing that doesn’t scale anymore,” said Knauss. “It’s attention.” He concluded, cheerfully: “I think I speak for everybody who does this kind of work, I’m just glad I could help — in some small way — to bring more thinkpieces into the world.”

I asked him what he thought about Kim Kardashian, her role in culture, and her nude images on the website.

“I wish,” he said, “I had that kind of confidence.”