How Netflix works: the (hugely simplified) complex stuff that happens every time you hit Play

Not long ago, House of Cards came back for its fifth season, finally ending a long wait for binge watchers around the world hooked on an American politician's ruthless ascent to the presidency. For them, kicking off a marathon is as simple as reaching for a device or remote, opening the Netflix app and hitting Play. Simple, fast and instantly gratifying.

What isn't as simple is what goes into running Netflix, a service that streams around 250 million hours of video per day to around 98 million paying subscribers in 190 countries. At this scale, delivering quality entertainment to every user within a few seconds is no joke. It means building top-notch infrastructure at a scale no other Internet service had reached before, and it also means that many participants in the experience have to be negotiated with and kept satisfied, from the production companies supplying the content to the internet providers handling the network traffic Netflix brings upon them.

This is, in short and in the plainest layman's terms, how Netflix works.

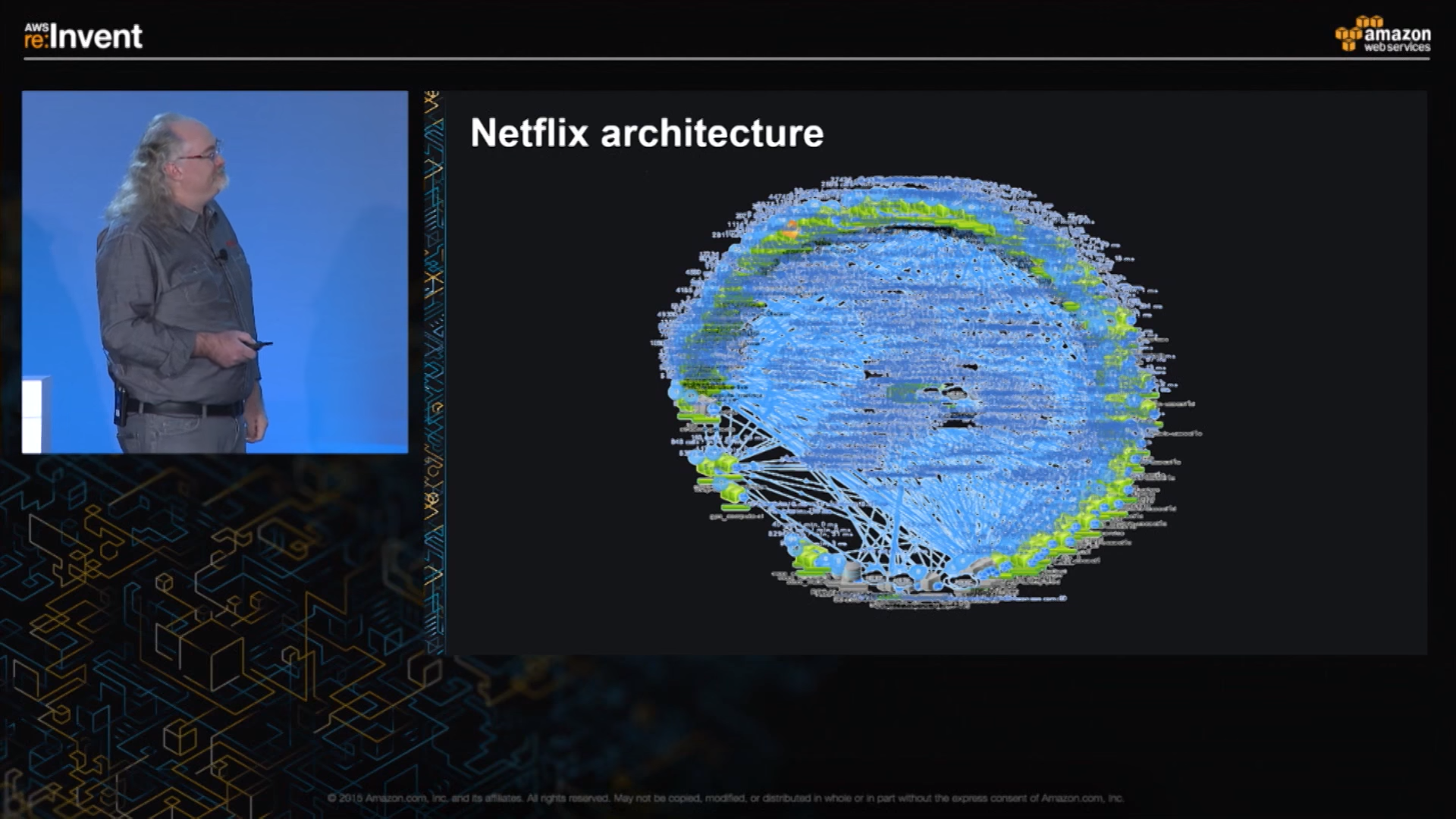

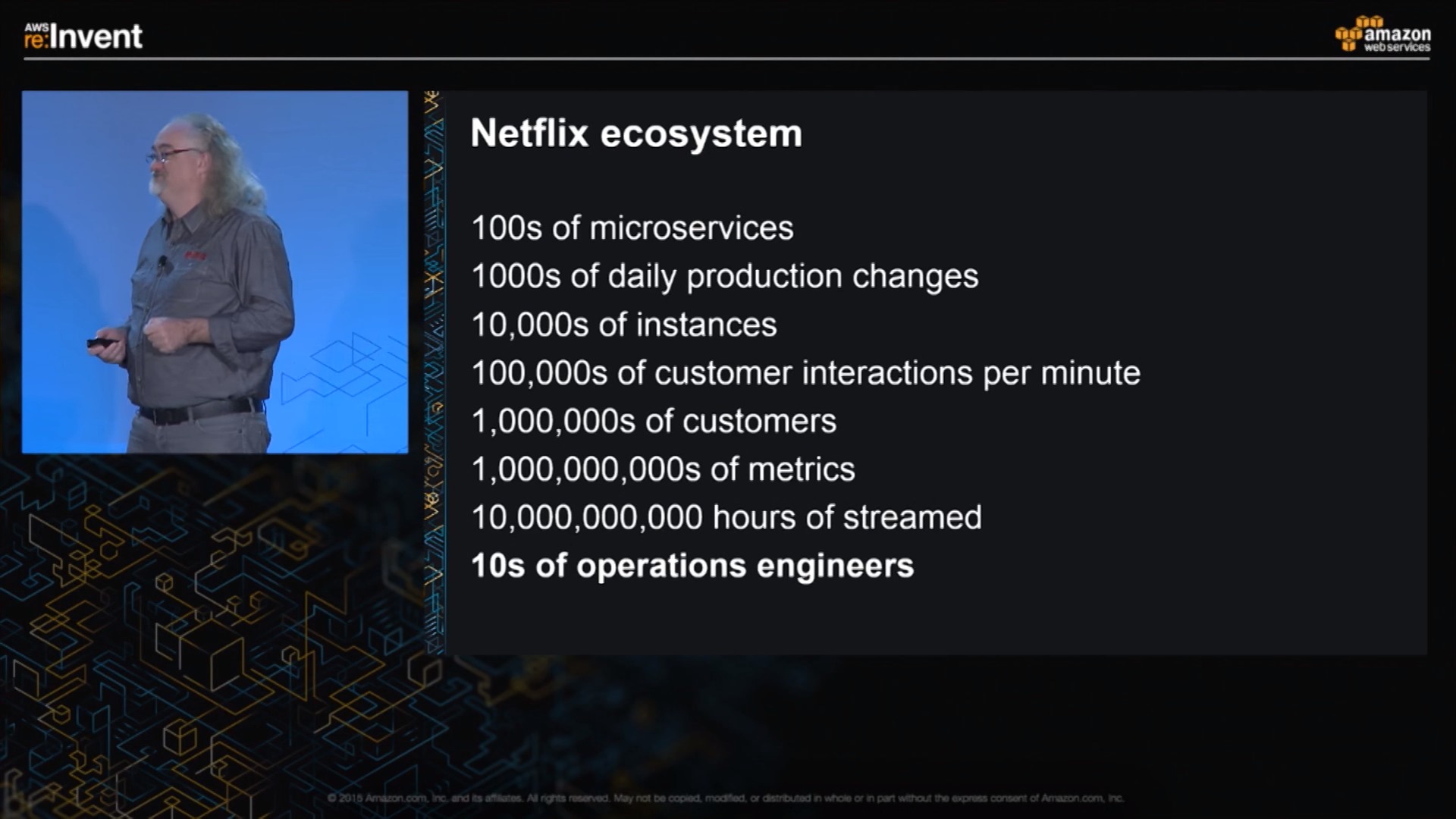

Hundreds of microservices, one giant service

Let us just try to understand how Netflix is structured on the technological side with a simple example.

Let's assume that the Maps app on your phone tracks your location all the time and saves detailed information about everywhere you go in a file, locations.txt. You then create an app called LocoList that, provided there's a Maps app on your phone, looks for this locations.txt file and shows all the places recorded in it in a simple list. It works flawlessly.

Now suppose the developers of the Maps app decide it's a better idea to store your location information somewhere other than locations.txt, and update the app so that it no longer creates that file on your phone. LocoList can no longer find the file it depended on for all its data, and there's no other way for it to get that information out of the Maps app. LocoList stops working. You're screwed.

All your work on LocoList has gone into the trash because a change to Maps broke your app. That might not seem like a big deal here, but on a huge service like Netflix, a change to one part of the application that takes the whole thing down not only ruins the experience for users; it can also force other parts of the application to be rewritten to accommodate that one tiny change. An application built as one big, tightly interlinked unit like this is what we call a monolithic architecture.

Around ten years ago, Netflix became one of the best-known pioneers of a different approach, rewriting the applications that run its entire service to fit a microservices architecture. This means that each application, or microservice, has its own code and resources and does not share them with any other app by design. When two applications do need to talk to each other, they use an application programming interface (API): a tightly controlled set of rules that both programs understand. Developers can then make changes, small or huge, to each application as long as it keeps honouring its API. Because the programs rely only on each other's APIs, a change inside one of them won't break the exchange of information.
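To make the LocoList story concrete, here is a minimal Python sketch of the API idea. All names (`LocationSource`, `MapsV1`, `MapsV2`, `loco_list`) are hypothetical, and in-process classes stand in for separate services; in a real microservices setup the call would typically travel over the network. The point is that LocoList depends only on the agreed `get_locations()` method, so Maps can change how it stores data without breaking it.

```python
from typing import Protocol


class LocationSource(Protocol):
    """The agreed API: anything that can return a list of place names."""

    def get_locations(self) -> list[str]: ...


class MapsV1:
    """Old Maps (hypothetical): keeps places as lines of a locations.txt-style file."""

    def __init__(self) -> None:
        self._file_lines = ["Budapest\n", "Vienna\n"]

    def get_locations(self) -> list[str]:
        return [line.strip() for line in self._file_lines]


class MapsV2:
    """New Maps (hypothetical): stores places somewhere else entirely (here, a dict)."""

    def __init__(self) -> None:
        self._store = {1: "Budapest", 2: "Vienna"}

    def get_locations(self) -> list[str]:
        return [self._store[key] for key in sorted(self._store)]


def loco_list(maps: LocationSource) -> str:
    """LocoList talks only to the API, never to Maps' internal storage."""
    return "\n".join(f"- {place}" for place in maps.get_locations())


print(loco_list(MapsV1()))
print(loco_list(MapsV2()))  # same output: the storage change did not break LocoList
```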

Netflix estimates that it uses around 700 microservices to control the many parts that make up the entire Netflix service: one microservice stores which shows you have watched, one charges the monthly fee to your credit card, one provides your device with the correct video files it can play, one looks at your watching history and uses algorithms to guess a list of movies you will like, and one provides the names and images of these movies to be shown in a list on the main menu. And that's just the tip of the iceberg. Netflix engineers can change any part of the application and roll out new features rapidly while making sure that nothing else in the service breaks down.
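The sketch below shows, under simplifying assumptions, how a main menu might be assembled from several of these services, and how one failing service can be contained instead of taking the whole page down. The service functions are hypothetical stand-ins, and the "fall back to a generic popular list" strategy is one common way of isolating failures, assumed here for illustration rather than taken from Netflix's actual design.

```python
from typing import Callable


def watch_history(user_id: str) -> list[str]:
    # Hypothetical viewing-history service.
    return ["House of Cards", "BoJack Horseman"]


def recommendations(history: list[str]) -> list[str]:
    # Hypothetical recommendation service; here we simulate it being down.
    raise ConnectionError("recommendation service unavailable")


def popular_titles() -> list[str]:
    # Assumed fallback: a generic list that needs no personal data.
    return ["Stranger Things", "Black Mirror"]


def call_with_fallback(call: Callable[[], list[str]],
                       fallback: Callable[[], list[str]]) -> list[str]:
    """Run a service call; if it fails to connect, use the fallback instead."""
    try:
        return call()
    except ConnectionError:
        return fallback()


def build_home_page(user_id: str) -> dict[str, list[str]]:
    history = watch_history(user_id)
    picks = call_with_fallback(lambda: recommendations(history), popular_titles)
    return {"continue_watching": history, "picked_for_you": picks}


print(build_home_page("user-42"))
```

Even with the recommendation service down, the page still renders; only one row degrades to a less personal version.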

To conclude, why does a microservices architecture matter so much to Netflix? Well, this is what they achieved just by choosing it:

Where do they run all of these microservices, though?

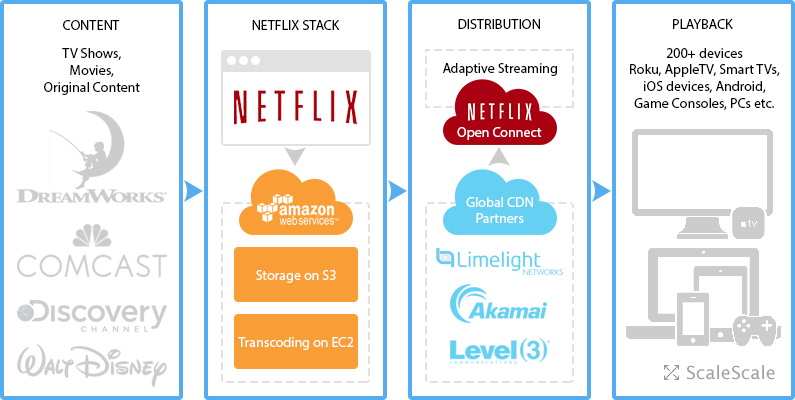

Running all of this requires a massive network of computer servers. Netflix once owned their own, but they realised that keeping up the breakneck pace at which they were growing (and needed to keep growing) would be difficult if they spent their time building computer systems to support their software and constantly fixing and modifying them. So they made a courageous decision: stop maintaining their own servers and move everything to the cloud, i.e. run everything on the servers of someone else who maintained the hardware, while Netflix engineers wrote hundreds of programs and deployed them rapidly. The someone else they chose for their cloud-based infrastructure is Amazon Web Services (AWS).

Wait. Amazon? The folks who also happen to run that Prime Video thingy? How can Netflix trust everything they have to an arch-rival?

Well, a lot of businesses follow a gentleman's agreement of sorts where they work with each other despite competing in the same categories. A good example is Samsung, which competes with Apple in phones while also manufacturing many of the iPhone's major parts. Netflix was an AWS customer before Prime Video turned up, and competing in streaming does not mean the two have to be hostile towards each other.

Netflix and Amazon's partnership turned out to be a huge win-win for both companies. Netflix became one of AWS's most advanced customers, pushing its capabilities to the maximum and constantly finding new ways to use the different servers AWS provided for various purposes (running microservices, storing movies, handling internet traffic) to its own advantage. AWS in turn improved its systems to let Netflix put massive loads on its servers and use different AWS products more flexibly, and applied the expertise it gained to serve the needs of thousands of other corporate customers. AWS proudly touts Netflix as its top customer, and Netflix can rapidly improve its service while keeping it stable thanks to AWS. That holds even if Netflix takes away some of Prime Video's popularity, or vice versa.

From reel to screen — a long journey

What good is any TV/movie service without, of course, TV shows and movies to watch? For Netflix, getting them from the film producer to the customer is a long and arduous process:

- If it's a show or movie Netflix doesn't produce itself (i.e. not a Netflix Original), Netflix has to negotiate for broadcast rights with the companies that distribute films or TV shows. This means paying a large sum of money for the legal right to broadcast a movie or TV show to customers in various regions around the world. Often the distribution company (or even Netflix itself) has signed exclusive deals with other video services or TV channels for some regions, which means Netflix might not be able to offer some shows to customers there, or only at a later date. For example, this delayed the House of Cards season 5 premiere in the Middle East to June 30, a full month after the 150+ countries that got it on May 30. They even got Underwood's Chief of Staff to explain this in a humorous (English) video:

- Netflix stores the original digital copy of the show or movie on its AWS servers. The originals are usually in high-quality cinema formats, and Netflix has to process them before anybody can watch.

- Netflix works on thousands of devices, and each of them plays different formats of video and sound files. Another set of AWS servers takes the original film file and converts it into hundreds of files, each meant to play the entire show or film on a particular type of device at a particular screen size or video quality. One file will work exclusively on the iPad, one on a full HD Android phone, one on a Sony TV that can play 4K video and Dolby sound, one on a Windows computer, and so on. Even more files are made at varying video qualities so that they load more easily on a poor network connection. This process is known as transcoding. The files are also locked with digital rights management, or DRM, typically by encrypting them so that only an authorised player can decode them: a technological measure meant to prevent piracy.

- The Netflix app or website determines which device you are using and fetches the file for that show made specifically for your device, at a video quality based on how fast your internet connection is at that moment.
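The transcoding step can be pictured as planning a grid of output files: one per device type per quality level that device can display. The Python sketch below only plans that list; the actual encoding would be done by a video encoder. The device profiles, resolutions and bitrates are made-up illustrative values, not Netflix's real encoding settings.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Rendition:
    device: str
    height: int        # vertical resolution in pixels
    bitrate_kbps: int  # target average bitrate


# Hypothetical device profiles: the tallest picture each device can show.
DEVICE_MAX_HEIGHT = {"phone": 1080, "tablet": 1080, "4k_tv": 2160}

# Hypothetical quality ladder of (height, bitrate) rungs, lowest first.
LADDER = [(240, 300), (480, 1000), (720, 3000), (1080, 5800), (2160, 16000)]


def plan_renditions() -> list[Rendition]:
    """List every file to produce: one per device per rung it can display."""
    return [
        Rendition(device, height, kbps)
        for (device, max_h), (height, kbps) in product(DEVICE_MAX_HEIGHT.items(), LADDER)
        if height <= max_h
    ]


for rendition in plan_renditions():
    print(rendition)
```

With three device types this already yields 13 files for a single title; multiply by real device variety, audio formats and languages, and "hundreds of files" per show follows quickly.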

This last step, fetching, is the most crucial one for Netflix, because it is where the Internet delivers the video from Netflix's servers to the customer's device. If it's poorly managed or ignored, Netflix becomes slow or unusable, which would virtually be the end of the company. The internet is the umbilical cord that connects Netflix to its customers, and it takes a lot of work to deliver the content a user wants in the shortest time possible over a crowded network where millions of services compete for bandwidth.
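Picking "a video quality based on how fast your internet is at that moment" is the job of adaptive bitrate selection. Here is a minimal throughput-based sketch in Python: choose the highest available bitrate that fits within a safety margin of the measured speed. The bitrate list and the 0.8 margin are assumptions for illustration; real players, Netflix's included, typically use more elaborate logic than this single rule.

```python
# Hypothetical bitrates (kbps) available for one title, lowest first.
AVAILABLE_KBPS = [300, 1000, 3000, 5800, 16000]


def pick_bitrate(measured_kbps: float, safety: float = 0.8) -> int:
    """Pick the highest bitrate that fits within a margin of measured throughput.

    If even the lowest rung does not fit, return it anyway so playback can start.
    """
    budget = measured_kbps * safety
    fitting = [kbps for kbps in AVAILABLE_KBPS if kbps <= budget]
    return max(fitting) if fitting else AVAILABLE_KBPS[0]


for speed in (250, 2000, 8000, 25000):
    print(speed, "->", pick_bitrate(speed))
```

The margin leaves headroom for the speed to dip without the player running out of buffered video.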

Racing against buffer time

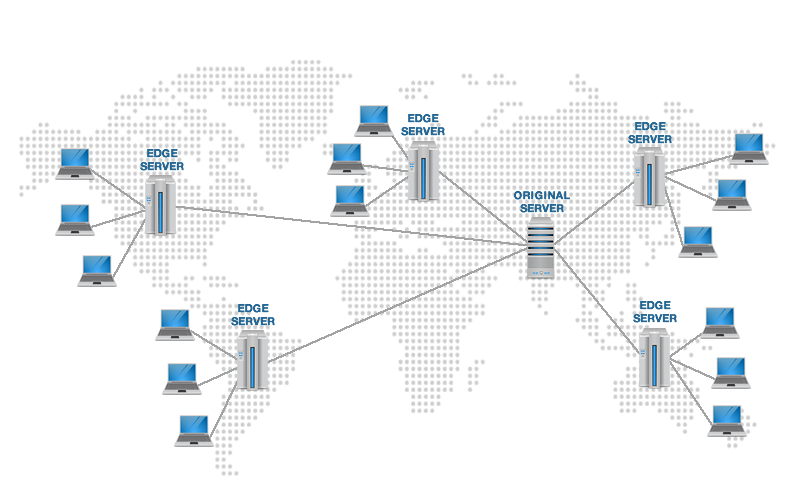

The entire gamut of operations that make up the Netflix ecosystem (software, content and technology) is rendered useless if the end user's internet connection is too poor to handle the video quality. Here's roughly how everything on the internet works: when you do something that requires net access, a request is sent through your internet service provider (ISP). The ISP forwards it to the servers that handle the website, and the servers send back a response, which is relayed to your computer and forms the result. For Netflix and other top-tier sites, where millions of hours of video are relayed across the internet between their servers and their users, a much larger network of servers is needed to maintain performance. They get one by building something called a Content Delivery Network (CDN).

What a CDN basically does is take the original website and the media content it contains and copy it across hundreds of servers spread all over the world. So when, say, you log in from Budapest, instead of connecting to the main Netflix server in the United States, you load an identical copy from the CDN server closest to Budapest. This greatly reduces latency (the time between a request and its response), so everything loads much faster. CDNs are a big part of why sites with huge numbers of users, like Google, Facebook or YouTube, load quickly largely regardless of where you are.
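The Budapest example can be sketched as "route the user to the geographically nearest copy". The Python below uses the haversine formula for great-circle distance. The three edge locations are hypothetical, and pure geography is a simplifying assumption: real CDNs usually steer traffic using network measurements rather than distance on a map alone.

```python
from math import asin, cos, radians, sin, sqrt

# Hypothetical CDN edge locations as (latitude, longitude).
EDGES = {
    "vienna": (48.2082, 16.3738),
    "frankfurt": (50.1109, 8.6821),
    "ashburn_us": (39.0438, -77.4874),
}


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371 * asin(sqrt(h))  # 6371 km: mean Earth radius


def nearest_edge(user: tuple[float, float]) -> str:
    """Name of the edge server with the smallest distance to the user."""
    return min(EDGES, key=lambda name: haversine_km(user, EDGES[name]))


budapest = (47.4979, 19.0402)
print(nearest_edge(budapest))  # vienna
```

Roughly 200 km to Vienna versus thousands of kilometres to the US means far less time spent in transit for every request.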

Netflix earlier used several CDNs, operated by giants such as Akamai, Level 3 and Limelight Networks, to deliver its content. But a growing user base meant delivering more content to more locations while lowering costs, and this led Netflix to build its own CDN, called Open Connect.

Here, instead of relying on AWS servers, Netflix installs its very own around the world, and they have only one purpose: to store content smartly and deliver it to users. Netflix strikes deals with internet service providers and gives them the red box you saw above at no cost. ISPs install these alongside their own servers. These Open Connect boxes download the Netflix library for their region from the main servers in the US. Rather than treating every title equally, the boxes prioritise the content that is most popular with Netflix users in their region, to favour speed, so a rarely watched film might take longer to load than a Stranger Things episode. Now, when you connect to Netflix, the Open Connect box closest to you delivers the content you need, so videos load faster than if your Netflix app tried to load them from the main servers in the US.
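Why might a rarely watched film load more slowly? One simple way to picture it is a box with limited storage that fills itself with the most-watched titles first. The Python sketch below uses a greedy "most views first, if it still fits" rule. The catalog numbers are invented, and the greedy rule is an assumption for illustration; the article does not describe Netflix's actual placement algorithm.

```python
# Hypothetical regional catalog: title -> (views, size in GB).
CATALOG = {
    "Stranger Things": (900, 40),
    "House of Cards": (700, 60),
    "Black Mirror": (500, 30),
    "Obscure Documentary": (5, 20),
}


def fill_box(catalog: dict[str, tuple[int, int]], capacity_gb: int) -> list[str]:
    """Greedily cache the most-watched titles that still fit on the box."""
    cached: list[str] = []
    used = 0
    by_views = sorted(catalog.items(), key=lambda item: item[1][0], reverse=True)
    for title, (_views, size) in by_views:
        if used + size <= capacity_gb:
            cached.append(title)
            used += size
    return cached


def serve(title: str, cached: list[str]) -> str:
    """Local box if the title is cached, otherwise a farther (slower) server."""
    return "local box" if title in cached else "farther server"


box = fill_box(CATALOG, capacity_gb=100)
print(box)                                # ['Stranger Things', 'House of Cards']
print(serve("Stranger Things", box))      # local box
print(serve("Obscure Documentary", box))  # farther server
```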

Think of it as hard drives around the world storing videos: the closer they are, the faster you can reach them and load the video. There is a lot more trickery going on behind the scenes. As this interview explains, whenever you hit play on a show, Netflix locates the 10 closest Open Connect boxes that have the show loaded on them. Your Netflix app or site then tries to detect which of them is closest or works fastest on your internet connection, and loads the video from there. This is partly why videos start out blurry and then suddenly sharpen up: Netflix is switching servers until it connects to the one that can give you the highest video quality, and the app also starts with a lower-quality stream, stepping up as it learns how fast your connection is.
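The server-switching behaviour described above can be sketched as: start streaming from the first candidate box, then switch whenever a faster one is measured. In this Python sketch the box names and throughput figures are hypothetical, and a fixed table stands in for what a real client would learn by timing actual downloads.

```python
from typing import Callable


def stream_plan(candidates: list[str], probe: Callable[[str], float]) -> list[str]:
    """Start on the first candidate, then switch whenever a faster one is measured.

    Returns the sequence of servers the player would stream from.
    """
    current = candidates[0]
    best = probe(current)
    history = [current]
    for server in candidates[1:]:
        speed = probe(server)
        if speed > best:  # only switch for a strictly faster server
            current, best = server, speed
            history.append(current)
    return history


# Hypothetical probe results in Mbps for four of the candidate boxes.
PROBES = {"box-a": 12.5, "box-b": 48.0, "box-c": 31.2, "box-d": 55.0}
print(stream_plan(list(PROBES), lambda server: PROBES[server]))
# ['box-a', 'box-b', 'box-d']
```

Each switch to a faster box lets the player afford a higher-quality stream, which is what the viewer sees as the picture sharpening.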

In a nutshell…

This is what happens when you hit that Play button:

- Hundreds of microservices, or tiny independent programs, work together to make one large Netflix service.

- Content that is legally acquired or licensed is converted into formats that fit your device and screen, and protected from being copied.

- Servers across the world make a copy of it and store it so that the closest one to you delivers it at max quality and speed.

- When you select a show, your Netflix app cherry-picks which of these servers to load the video from.

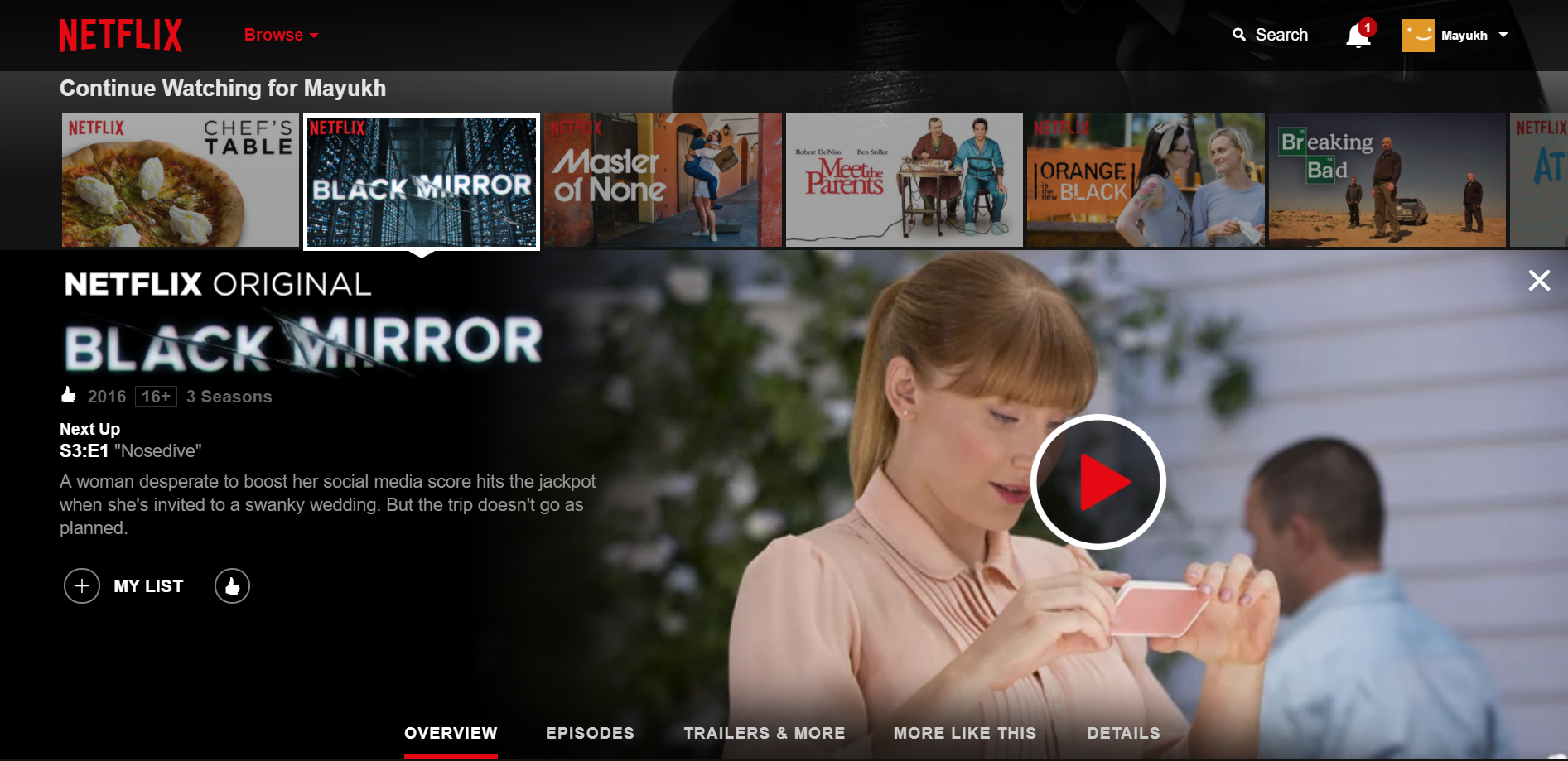

- You are now gripped by Frank Underwood's chilling tactics, depressed by BoJack Horseman's rollercoaster life, tickled by Dev in Master of None and made fearful of the future of technology by the stories in Black Mirror. And your lifespan shrinks as binge watching turns you into a couch potato.

It looked so simple before, right?

[Update (November 21, 2017): An image in this article was mistakenly attributed to be that of an Amazon Web Services data center. I am grateful to the kind folks at Amazon Web Services in Germany for reaching out personally and alerting me to this error.]